AI Privacy

2024-25 Weekly Discussion Session I

Discussion Theme: AI Privacy

Artificial Intelligence (AI) promises numerous advancements and conveniences. However, the widespread use of AI technologies raises significant privacy concerns. From data collection to inference, AI systems can infringe on individual privacy, posing ethical and legal challenges. This discussion series aims to foster a deeper understanding of these issues, focusing on the balance between innovation and privacy, ethical considerations, and recent research advances in AI privacy.

Meeting Details

Time:

Every Sunday, 3:00 PM - 4:30 PM EST

Zoom Link:

The Zoom link will be provided to Boil-MLC members via Discord, WeChat or email.

Language:

The discussion will be conducted in English.

Recording:

All sessions will be recorded and made available post-meeting on this page for members to review.

Reading:

Feel free to pick one or two papers from each week's reading list and join the discussion with your ideas and thoughts.

Syllabus

Week 1: Introduction to AI Privacy

- Overview of AI privacy issues, focusing on differential privacy.

- Ethical implications.

- Key challenges and legal perspectives.
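
For newcomers, a minimal sketch of the Laplace mechanism may help make differential privacy concrete. It is the textbook way to release a count under epsilon-differential privacy, assuming a simple counting query with sensitivity 1. The names `laplace_noise` and `private_count` are illustrative only and do not come from any library or from the readings.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. Exponential draws with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Release the number of matching records under epsilon-differential privacy.

    A counting query has L1 sensitivity 1 (adding or removing one record
    changes the count by at most 1), so Laplace noise with scale
    sensitivity / epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

Smaller epsilon means a larger noise scale (1/epsilon) and stronger privacy. Averaging many independent releases would recover the true count, which is why repeated queries consume privacy budget.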

Reading:

Week 2: AI Backdoors

- Understanding backdoors in AI systems.

- Methods to detect and prevent backdoors.

- Real-world implications.
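
For intuition on how the simplest backdoors are planted, the sketch below follows the classic BadNets-style recipe, which is not one of the papers listed here. It stamps a small pixel patch on a fraction of training images, relabels them to an attacker-chosen class, and then measures attack success rate on triggered test inputs. Images are assumed to be grayscale lists of lists with values in [0, 1], and all function names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Set, Tuple

Image = List[List[float]]  # grayscale pixels in [0, 1]


def stamp_trigger(img: Image, size: int = 3, value: float = 1.0) -> Image:
    """Return a copy of `img` with a solid square patch in the bottom-right corner."""
    h, w = len(img), len(img[0])
    if not 0 < size <= min(h, w):
        raise ValueError("trigger size must fit inside the image")
    out = [row[:] for row in img]
    for i in range(h - size, h):
        for j in range(w - size, w):
            out[i][j] = value
    return out


def poison_dataset(data: Sequence[Tuple[Image, int]], target_label: int,
                   rate: float, rng: random.Random
                   ) -> Tuple[List[Tuple[Image, int]], Set[int]]:
    """Stamp the trigger on a random `rate` fraction of samples and relabel them."""
    n_poison = int(round(rate * len(data)))
    chosen = set(rng.sample(range(len(data)), n_poison))
    poisoned = [
        (stamp_trigger(img), target_label) if k in chosen else (img, label)
        for k, (img, label) in enumerate(data)
    ]
    return poisoned, chosen


def attack_success_rate(model: Callable[[Image], int],
                        test_data: Sequence[Tuple[Image, int]],
                        target_label: int) -> float:
    """Fraction of non-target test inputs classified as `target_label` once triggered."""
    victims = [img for img, label in test_data if label != target_label]
    if not victims:
        return 0.0
    hits = sum(1 for img in victims if model(stamp_trigger(img)) == target_label)
    return hits / len(victims)
```

The model is passed in as a plain callable, so any classifier trained on the poisoned data can be evaluated the same way.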

Reading:

- BadNL: Backdoor Attacks against NLP Models with Semantic-preserving Improvements

- TrojLLM: A Black-box Trojan Prompt Attack on Large Language Models

- Poisoning and Backdooring Contrastive Learning

- BadEncoder: Backdoor Attacks to Pre-trained Encoders in Self-Supervised Learning

- Distribution Preserving Backdoor Attack in Self-Supervised Learning

- Dual-Key Multimodal Backdoors for Visual Question Answering

- BACKDOORL: Backdoor Attack against Competitive Reinforcement Learning

- Backdooring Neural Code Search

- Towards Reliable and Efficient Backdoor Trigger Inversion via Decoupling Benign Features

- Elijah: Eliminating Backdoors Injected in Diffusion Models via Distribution Shift

- ODSCAN: Backdoor Scanning for Object Detection Models

- Unicorn: A Unified Backdoor Trigger Inversion Framework

- Detecting Backdoors in Pre-trained Encoders

- Detecting AI Trojans Using Meta Neural Analysis

- ASSET: Robust Backdoor Data Detection Across a Multiplicity of Deep Learning Paradigms

- Reconstructive Neuron Pruning for Backdoor Defense

- UNIT: Backdoor Mitigation via Automated Neural Distribution Tightening

- Exploring the Orthogonality and Linearity of Backdoor Attacks

Week 3: LLM Jailbreaking

- Techniques and challenges in LLM jailbreaking.

- Security concerns and mitigation strategies.

- Case studies and examples.

Reading:

- “Do Anything Now”: Characterizing and Evaluating In-The-Wild Jailbreak Prompts on Large Language Models

- Tricking LLMs into Disobedience: Understanding, Analyzing, and Preventing Jailbreaks

- Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study (NDSS 2024)

- Survey of Vulnerabilities in Large Language Models Revealed by Adversarial Attacks

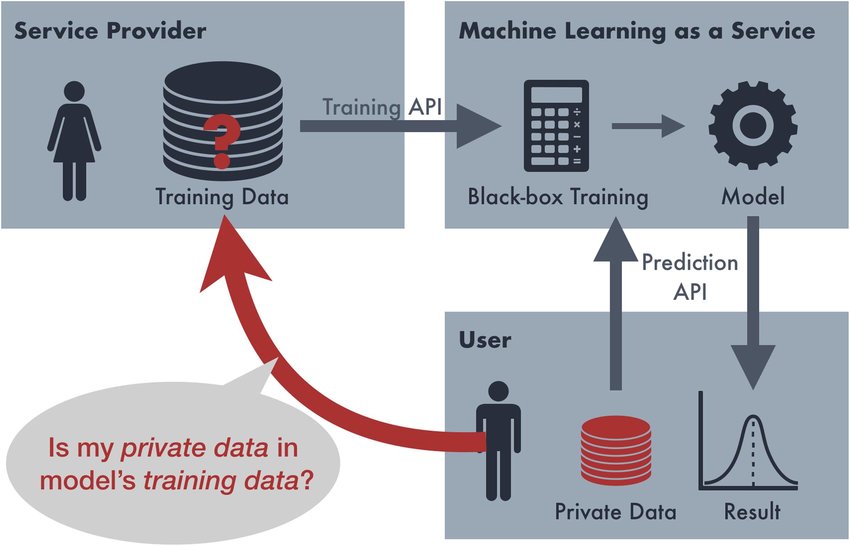

Week 4: Membership Inference Attacks on Classification Models

- Understanding membership inference attacks.

- Privacy risks associated with classification models.

- Defense mechanisms.
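
A simple baseline for membership inference is a loss-threshold attack. Overfit models tend to assign lower loss to training points than to unseen points, so the attacker guesses "member" whenever an example's loss falls below a threshold. The sketch below assumes the attacker can observe the model's probability on each example's true label. A common threshold choice is the model's average training loss, following Yeom et al. (2018). Stronger attacks, such as the likelihood-ratio test in "Membership Inference Attacks From First Principles", calibrate per example instead of using one global threshold. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def per_example_loss(prob_true_label: float, eps: float = 1e-12) -> float:
    # Cross-entropy loss of one example, given the model's probability on its true label.
    return -math.log(max(prob_true_label, eps))


def loss_threshold_attack(probs: Sequence[float], threshold: float) -> List[bool]:
    # Predict "member" (True) when the loss is below the threshold.
    return [per_example_loss(p) < threshold for p in probs]


def attack_accuracy(member_probs: Sequence[float],
                    nonmember_probs: Sequence[float], threshold: float) -> float:
    # Accuracy of the attack on a labeled mix of known members and non-members.
    preds_in = loss_threshold_attack(member_probs, threshold)
    preds_out = loss_threshold_attack(nonmember_probs, threshold)
    correct = sum(preds_in) + sum(not p for p in preds_out)
    return correct / (len(preds_in) + len(preds_out))
```

An accuracy near 0.5 on a balanced mix means the attack does no better than guessing; the gap above 0.5 is a rough signal of how much the model leaks about its training set.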

Reading:

- Membership Inference Attacks on Machine Learning: A Survey

- Membership Inference Attacks Against Machine Learning Models

- Membership Inference Attacks From First Principles

- MIST: Defending Against Membership Inference Attacks Through Membership-Invariant Subspace Training

- Efficient Privacy Auditing in Federated Learning

Week 5: Machine Unlearning

- Concept and necessity of machine unlearning.

- Techniques and challenges.

- Potential applications and future directions.
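
One widely cited approach to exact unlearning is SISA, from Bourtoule et al.'s "Machine Unlearning", which is not on this week's list. It avoids full retraining by sharding: train one model per disjoint shard, aggregate predictions by majority vote, and on a deletion request retrain only the shard that held the deleted example. The sketch below omits SISA's within-shard slicing and checkpointing. `train_fn` is a hypothetical stand-in for whatever training routine you use, and it must accept an empty shard.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, List, Tuple

Example = Tuple[Hashable, object, object]  # (example_id, features, label)


class ShardedEnsemble:
    """SISA-style exact unlearning sketch: one model per disjoint shard."""

    def __init__(self, data: List[Example], num_shards: int,
                 train_fn: Callable[[List[Example]], Callable[[object], object]]):
        if num_shards < 1:
            raise ValueError("num_shards must be at least 1")
        self.train_fn = train_fn
        self.shards: List[List[Example]] = [[] for _ in range(num_shards)]
        self.location: Dict[Hashable, int] = {}
        for k, ex in enumerate(data):
            s = k % num_shards  # round-robin assignment into disjoint shards
            self.shards[s].append(ex)
            self.location[ex[0]] = s
        self.models = [train_fn(shard) for shard in self.shards]
        self.retrain_count = 0

    def predict(self, x):
        # Aggregate the per-shard models by majority vote.
        votes = Counter(model(x) for model in self.models)
        return votes.most_common(1)[0][0]

    def unlearn(self, example_id: Hashable) -> int:
        """Remove one example and retrain only its shard; returns that shard's index."""
        s = self.location.pop(example_id)
        self.shards[s] = [ex for ex in self.shards[s] if ex[0] != example_id]
        self.models[s] = self.train_fn(self.shards[s])
        self.retrain_count += 1
        return s
```

Because no other shard ever saw the deleted example, retraining one shard yields the same result as if that example had never been in the training set, at roughly 1/num_shards of the retraining cost.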

Reading:

- Learning to Unlearn: Instance-wise Unlearning for Pre-trained Classifiers

- Fast Machine Unlearning Without Retraining Through Selective Synaptic Dampening

- Separate the Wheat from the Chaff: Model Deficiency Unlearning via Parameter-Efficient Module Operation

- Towards Effective and General Graph Unlearning via Mutual Evolution

- Backdoor Attacks via Machine Unlearning

- RRL: Recommendation Reverse Learning

- SIFU: Sequential Informed Federated Unlearning for Efficient and Provable Client Unlearning in Federated Optimization

- Forgetting User Preference in Recommendation Systems with Label-Flipping

- FedCIO: Efficient Exact Federated Unlearning with Clustering, Isolation, and One-shot Aggregation

- Machine Unlearning for Image-to-Image Generative Models

- Label-Agnostic Forgetting: A Supervision-Free Unlearning in Deep Models

- Ring-A-Bell! How Reliable are Concept Removal Methods for Diffusion Models?

- A Unified and General Framework for Continual Learning

- Rethinking Adversarial Robustness in the Context of the Right to be Forgotten

- Machine Unlearning in Learned Databases: An Experimental Analysis

- CaMU: Disentangling Causal Effects in Deep Model Unlearning

- Learn To Unlearn for Deep Neural Networks: Minimizing Unlearning Interference With Gradient Projection

- On the Effectiveness of Unlearning in Session-Based Recommendation

- Graph Unlearning with Efficient Partial Retraining

- ERASER: Machine Unlearning in MLaaS via an Inference Serving-Aware Approach

- Brainwash: A Poisoning Attack to Forget in Continual Learning

- Challenging Forgets: Unveiling the Worst-Case Forget Sets in Machine Unlearning

- Scissorhands: Scrub Data Influence via Connection Sensitivity in Networks

- To Generate or Not? Safety-Driven Unlearned Diffusion Models Are Still Easy To Generate Unsafe Images … For Now

- How to Forget Clients in Federated Online Learning to Rank?

- SalUn: Empowering Machine Unlearning via Gradient-based Weight Saliency in Both Image Classification and Generation

- Tangent Transformers for Composition, Privacy and Removal

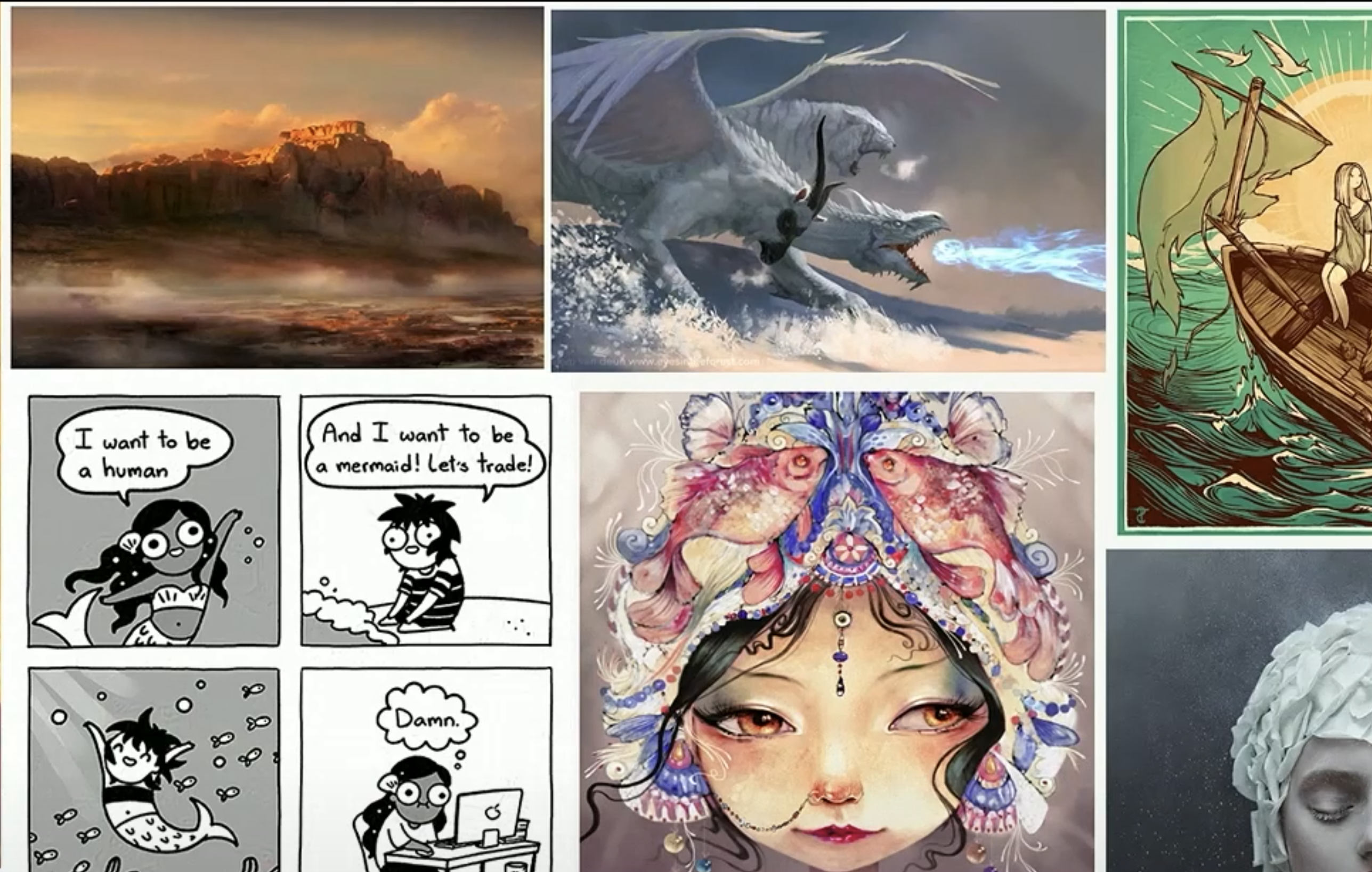

Week 6: Alignment and Generated Content Detection

- Ensuring AI alignment with human values.

- Challenges in detecting AI-generated content.

- Ethical considerations.
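
Several of the zero-shot text detectors listed below score a passage by how closely it follows a language model's own preferences. The sketch assumes you have already obtained, from some scoring LM, each token's log-probability and its rank in the model's predicted distribution (1 = top prediction); no model call is included. `log_rank_score` computes the mean log-rank, a common zero-shot baseline building on GLTR's rank statistics, and `lrr_score` computes a log-likelihood to log-rank ratio in the spirit of DetectLLM's LRR. Both function names are hypothetical.

```python
import math
from typing import Sequence


def log_rank_score(ranks: Sequence[int]) -> float:
    # Mean log-rank of the observed tokens under the scoring LM.
    # Lower values mean the text sticks closer to the model's top choices.
    if not ranks or min(ranks) < 1:
        raise ValueError("ranks must be non-empty and 1-indexed")
    return sum(math.log(r) for r in ranks) / len(ranks)


def lrr_score(token_log_probs: Sequence[float], ranks: Sequence[int]) -> float:
    # |mean log-probability| / mean log-rank; higher suggests machine-generated text.
    if len(token_log_probs) != len(ranks):
        raise ValueError("need one log-probability per ranked token")
    mean_log_rank = log_rank_score(ranks)
    if mean_log_rank == 0.0:
        return math.inf  # every token was the model's top-1 prediction
    mean_log_p = sum(token_log_probs) / len(token_log_probs)
    return abs(mean_log_p) / mean_log_rank
```

The decision threshold is deliberately left out, since it has to be calibrated on known human-written and machine-generated text for the scoring model in use.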

Reading:

- Universal and Transferable Adversarial Attacks on Aligned Language Models

- On Large Language Models’ Resilience to Coercive Interrogation

- AutoDAN: Generating Stealthy Jailbreak Prompts on Aligned Large Language Models

- How Johnny Can Persuade LLMs to Jailbreak Them: Rethinking Persuasion to Challenge AI Safety by Humanizing LLMs

- CNN-Generated Images Are Surprisingly Easy to Spot… for Now

- Leveraging Frequency Analysis for Deep Fake Image Recognition

- Towards Universal Fake Image Detectors That Generalize Across Generative Models

- DIRE for Diffusion-Generated Image Detection

- DRCT: Diffusion Reconstruction Contrastive Training towards Universal Detection of Diffusion Generated Images

- GLTR: Statistical Detection and Visualization of Generated Text

- DetectGPT: Zero-shot Machine-Generated Text Detection using Probability Curvature

- RADAR: Robust AI-Text Detection via Adversarial Learning

- DetectLLM: Leveraging Log Rank Information for Zero-Shot Detection of Machine-Generated Text

- Fast-DetectGPT: Efficient Zero-Shot Detection of Machine-Generated Text via Conditional Probability Curvature

- Raidar: geneRative AI Detection viA Rewriting

- Spotting LLMs With Binoculars: Zero-Shot Detection of Machine-Generated Text

Week 7 and Beyond: Paper Readings and Open Discussion

- After the initial weeks, we will transition to reading and discussing seminal papers and emerging research in AI privacy.

- Each week, members will be assigned a paper to read, followed by an open discussion.

Policies

- Attendance: Only Boil-MLC members can attend live sessions.

- Participation: Active participation is encouraged to foster a collaborative learning environment.

- Recording Access: Recordings of sessions will be posted on this page after each meeting for those who missed the live discussion.

- Confidentiality: Discussions are for educational purposes only. Sharing of sensitive or proprietary information is discouraged.

- Respect and Inclusivity: All participants are expected to maintain a respectful and inclusive atmosphere during discussions.

Join Us

For a comprehensive understanding of AI privacy and to engage with a passionate community, join our weekly discussions. Stay informed, stay ethical, and contribute to the future of AI.

Credits

- https://www.researchgate.net/figure/Membership-inference-attack_fig1_339897331

- https://www.youtube.com/watch?v=WpHkTVb3CUg